At Stacktics, we work with data constantly. This includes performing ETLs, moving data from A to B, providing dashboard-style real-time analytics, and using machine learning to derive insights from large-scale data. Working in this environment means staying on top of the tools available to our clients so that we can best streamline these processes. With this in mind, let’s take a look at Google Cloud Composer, a managed service that addresses these needs.

What is Google Cloud Composer?

“Google Cloud Composer – or Composer for short – is a fully managed workflow orchestration service that empowers you to author, schedule, and monitor pipelines that span across clouds and on-premises data centers.” (Google Cloud Composer definition)

“What does that mean for me?”

Basically, Google Cloud Composer is a way of managing recurring tasks. For example, if a client wants to automate ingesting data from A to B every day, I can use Composer to run that pipeline at any time interval I choose. This becomes very powerful when tasks start running concurrently and/or in sequence, with some tasks waiting on others. Imagine trying to run 100 different pipelines using cron jobs.
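Airflow, and therefore Composer, models each pipeline as a directed acyclic graph (DAG) of tasks. The sketch below is not Airflow code: it uses Python's standard-library `graphlib` and a hypothetical five-task pipeline to illustrate the bookkeeping a scheduler does for you and cron does not, namely working out which tasks must wait for others and which can run side by side.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a task, each value is the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}


def execution_waves(graph):
    """Group tasks into waves. Every task in a wave has all of its dependencies
    satisfied by earlier waves, so tasks within one wave can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so its dependents unlock
    return waves


for number, wave in enumerate(execution_waves(pipeline), start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

The two extract tasks land in the same wave and can run in parallel, while everything downstream runs in order. With cron you would have to encode that ordering yourself, usually by guessing at start times.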

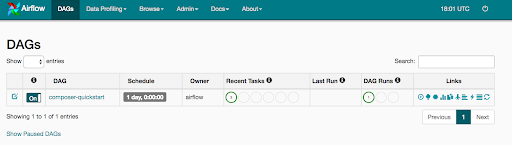

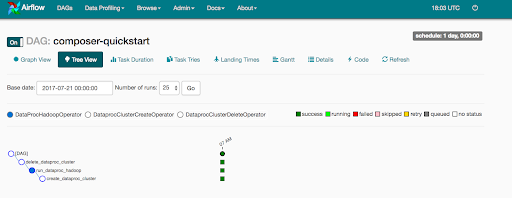

Google Cloud Composer is built on Apache Airflow, which gives it a very useful UI out of the box. This UI gives you full control over your pipelines and lets you view the logs, source code, and health status of your pipelines. If you have ever had to SSH into servers to check logs, you will instantly love this feature.

Airflow vs. Composer?

So, what sets Composer apart from Airflow? Speaking as someone who has used both, a big difference is that Composer is managed. Setting up Airflow can take time, and if you are like me, you would rather spend your time building pipelines than setting up Airflow. The winning factor for Composer over a standard Airflow setup is that it is built on Kubernetes and a microservice architecture. Kubernetes lets you distribute your tasks across multiple machines using containers. This is huge, as you are no longer limited to a single machine, and the fact that Composer is designed to run on Kubernetes is a big draw for me personally.

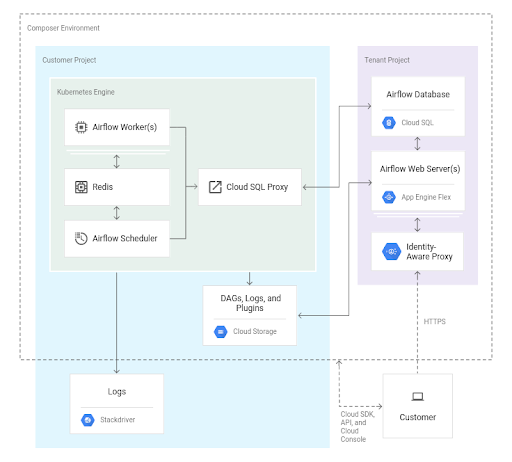

A brief overview of Cloud Composer Architecture

Below is an overview of how Composer works under the hood. I won’t go into too much depth; my intent is to explain what each component does. If you find this section too technical or are just exploring what Composer can do, feel free to skip ahead with this takeaway: the beauty of Composer is that it deploys and maintains this environment for you.

Although Google’s managed services are great because the DevOps work is handled for you, it is always good to know how they work under the hood to truly understand their power and their limitations. Coming from a technical background, I appreciate being able to implement the same architecture outside of managed services, since we sometimes need to work in both on-premises and cloud environments.

Now, let’s get into the components of Composer.

Tenant Project

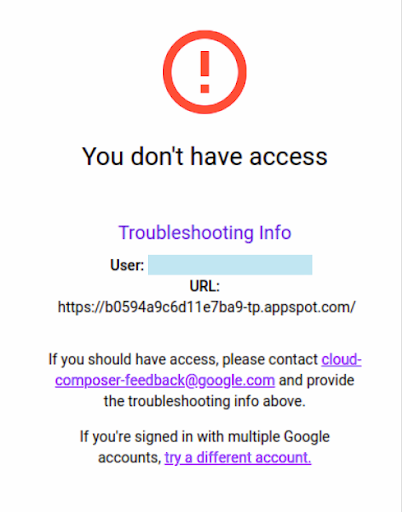

To unify IAM access control and to provide an additional layer of security, App Engine and Cloud SQL are deployed in a tenant project. This is very useful because IAM then controls access not only to your GCP resources but also to the Airflow web server, without you having to worry about firewall rules. A self-managed web server deployment is also available if you require IP whitelisting instead of IAM authentication.

Cloud SQL:

- Cloud SQL stores the Airflow metadata

- Database access is limited by default to the default service account or a specified one

- Backed up daily

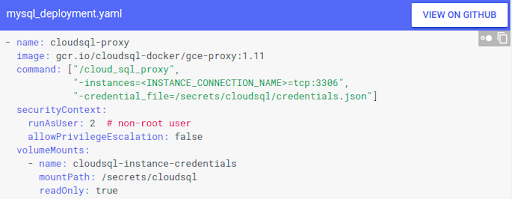

- To access the database from outside this service account, use the Cloud SQL Proxy in the Kubernetes cluster

App Engine:

- The App Engine flexible environment hosts the Airflow web server

Cloud Storage:

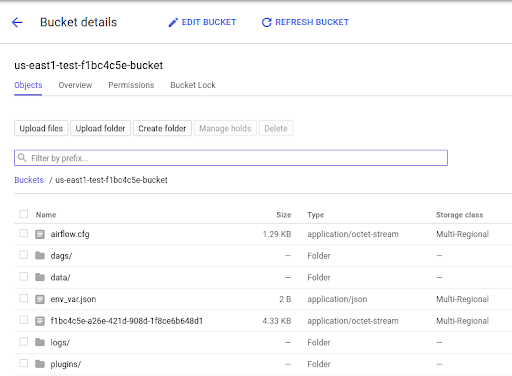

- A Cloud Storage bucket is automatically created for you to hold your DAGs and code. The bucket’s folders are then synchronized across workers (each worker is a node in the Kubernetes cluster).
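Deploying to Composer mostly comes down to copying files into that bucket. The helper below is a minimal sketch that builds the `gsutil cp` command for a local file, assuming the usual Composer bucket layout of `dags/` for DAG files, `plugins/` for Airflow plugins, and `data/` for supporting files. The bucket name and file paths are hypothetical placeholders, and the code only builds the command; running it requires `gsutil` and write access to your environment’s bucket.

```python
from pathlib import PurePosixPath

# Assumed mapping from the kind of file to the Composer bucket folder it belongs in.
BUCKET_FOLDERS = {"dag": "dags", "plugin": "plugins", "data": "data"}


def destination_uri(bucket: str, local_path: str, kind: str = "dag") -> str:
    """Return the gs:// URI that a local file should be copied to."""
    if kind not in BUCKET_FOLDERS:
        raise ValueError(f"unknown kind {kind!r}; expected one of {sorted(BUCKET_FOLDERS)}")
    filename = PurePosixPath(local_path).name  # keep only the file name, not local folders
    return f"gs://{bucket}/{BUCKET_FOLDERS[kind]}/{filename}"


def gsutil_copy_command(bucket: str, local_path: str, kind: str = "dag") -> list:
    """Build (but do not run) the gsutil command that uploads the file."""
    return ["gsutil", "cp", local_path, destination_uri(bucket, local_path, kind)]


# Hypothetical bucket name; Composer generates the real one when it creates the environment.
print(" ".join(gsutil_copy_command("example-composer-bucket", "pipelines/daily_ingest.py")))
```

The `gcloud composer environments storage dags import` command is another way to do the same upload without looking up the bucket name yourself.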

Google Kubernetes Engine:

- Core components such as the scheduler, worker nodes and Celery executor live here.

- Redis is the message broker for the CeleryExecutor used under the hood and ensures messages persist across container restarts.
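To make the broker and worker roles concrete, here is a toy, single-process stand-in for the CeleryExecutor pattern: the scheduler puts tasks on a broker and several workers each pull the next available task. It is an illustration only. A `queue.Queue` plays the role of Redis and threads play the role of worker nodes, and unlike Redis this in-memory queue loses everything if the process restarts, which is exactly why Composer uses a real broker. The task names are hypothetical.

```python
import queue
import threading


def run_workers(tasks, num_workers=3):
    """Run (task_id, callable) pairs on a pool of workers fed by a shared queue.

    Returns {task_id: (worker_name, status, value_or_error)}.
    """
    broker = queue.Queue()  # stands in for the Redis broker
    results = {}
    lock = threading.Lock()

    def worker(name):
        while True:
            task = broker.get()  # blocks until the "scheduler" enqueues something
            if task is None:  # sentinel: no more work for this worker
                return
            task_id, fn = task
            try:
                outcome = ("ok", fn())
            except Exception as exc:  # a failed task should not kill the worker
                outcome = ("failed", repr(exc))
            with lock:
                results[task_id] = (name, *outcome)

    threads = [threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(num_workers)]
    for t in threads:
        t.start()
    for task in tasks:  # the "scheduler" enqueues every task
        broker.put(task)
    for _ in threads:  # one sentinel per worker, queued after all real tasks
        broker.put(None)
    for t in threads:
        t.join()
    return results


tasks = [(f"ingest_{n}", lambda n=n: n * n) for n in range(6)]
tasks.append(("broken_task", lambda: 1 / 0))
results = run_workers(tasks)
for task_id in sorted(results):
    print(task_id, results[task_id])
```

Which worker picks up which task varies from run to run; the point is that adding workers (or, in Composer, nodes) increases how many tasks can run at once without changing the tasks themselves.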

Stackdriver:

- Composer is integrated with Stackdriver for monitoring and logging. This lets you view all of your logs in one central place, which is very useful when you are running, say, a 10-node cluster.

Extra notes

We work a lot with large enterprises and their security teams. An advantage of using Composer is that, because the application runs on Google Kubernetes Engine, its Cloud SQL database is reachable only through private IP addresses or via the Cloud SQL Proxy. This is a great design choice by Google because no traffic is exposed to the internet. If you are interested, here is how you can add the Cloud SQL Proxy:

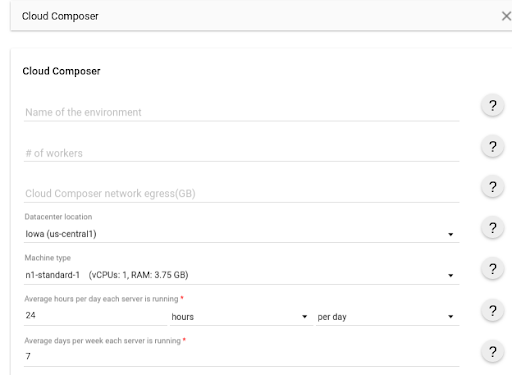

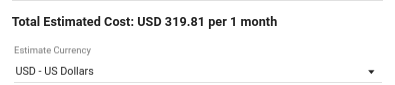
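Once the proxy is running, clients connect to it on localhost instead of to the database directly. The sketch below builds the two pieces you typically need: the proxy invocation and a SQLAlchemy-style connection URI (the format Airflow uses for its metadata database connection). It assumes the v1 proxy binary, `cloud_sql_proxy`, and its `-instances=PROJECT:REGION:INSTANCE=tcp:PORT` flag; the newer v2 binary, `cloud-sql-proxy`, uses a different syntax. The instance name, credentials, and SQL dialect below are hypothetical, so check which database engine your Composer environment actually uses.

```python
from urllib.parse import quote_plus


def proxy_command(instance_connection_name: str, port: int) -> list:
    """Cloud SQL Proxy (v1) invocation that listens on localhost:<port>."""
    return ["cloud_sql_proxy", f"-instances={instance_connection_name}=tcp:{port}"]


def sqlalchemy_uri(dialect: str, user: str, password: str, database: str,
                   port: int, host: str = "127.0.0.1") -> str:
    """Connection URI pointing at the proxy's local listener.

    User and password are URL-encoded so characters such as '@' or '/'
    do not break URI parsing.
    """
    return f"{dialect}://{quote_plus(user)}:{quote_plus(password)}@{host}:{port}/{database}"


# Hypothetical values for illustration only.
print(" ".join(proxy_command("my-project:us-central1:airflow-db", 3306)))
print(sqlalchemy_uri("mysql+mysqldb", "airflow", "p@ss/word", "airflow-db", 3306))
```

Encoding the password matters more than it looks: an unescaped `@` in a password makes the URI parser treat the rest of it as the host name.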

Pricing

This is the sticking point: compared to installing your own database on a server and running Airflow there, running Airflow in Composer is expensive. Then again, almost anything is expensive compared to that. The expense mainly comes from having to run a database and a cluster of at least three worker nodes continuously. My only suggestions for Google are to allow the worker nodes to scale down to zero or one, and to allow scheduling times at which the whole environment turns off automatically. For example, if I only run jobs at night, I would like to run the environment only during the night and then turn it off so that I can manage my costs better.
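A quick back-of-the-envelope calculation shows why scheduled shutdown would matter. The hourly rates below are made-up placeholders, not Composer’s actual prices, and the "night only" case models the shutdown feature I am wishing for rather than something Composer offers; plug in current figures from Google’s pricing page for a real estimate.

```python
def monthly_cost(node_hourly_rate, num_nodes, fixed_hourly_rate, hours_per_day, days=30):
    """Estimated monthly spend for an environment that runs hours_per_day.

    node_hourly_rate:  cost per worker node per hour (hypothetical)
    fixed_hourly_rate: cost per hour of the always-present pieces, e.g. the
                       database and web server (hypothetical)
    """
    hourly = node_hourly_rate * num_nodes + fixed_hourly_rate
    return hourly * hours_per_day * days


NODE_RATE = 0.125   # hypothetical $/hour per worker node
FIXED_RATE = 0.25   # hypothetical $/hour for database + web server

always_on = monthly_cost(NODE_RATE, 3, FIXED_RATE, hours_per_day=24)
night_only = monthly_cost(NODE_RATE, 3, FIXED_RATE, hours_per_day=8)

print(f"always on:  ${always_on:.2f}")                  # always on:  $450.00
print(f"night only: ${night_only:.2f}")                 # night only: $150.00
print(f"savings:    {1 - night_only / always_on:.0%}")  # savings:    67%
```

Whatever the real rates are, the ratio holds: an environment that only needs to run 8 hours a day is paying for three times the uptime it uses.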

Conclusions

Although it is disappointing that Composer cannot scale to zero, its features are impressive. If our client projects need Airflow, I will seriously consider Composer as my go-to, depending on project scope. The main reason is that setting up and maintaining many custom Airflow environments, in GCP and elsewhere, can be very time-consuming. The fact that Composer uses Kubernetes by default, letting you create a robust, scalable scheduling environment in minutes, is simply too powerful to ignore, and it should weigh heavily when deciding which architecture to use. Finally, if you are only looking for a simple, cheap Airflow environment, it may be worth deploying it yourself. However, if you are looking for a scalable, powerful solution that requires little DevOps work from you beyond deploying your DAGs and code to a Google Cloud Storage bucket, Composer is definitely the solution for you.